Compute Express Link (CXL) is an open standard that allows:

a) A host to access an externally connected pool of DDR memory with simple load/store commands at low latency.

b) A host server to expand its memory map and acquire DDR space statically or dynamically, as program requirements dictate.

c) Devices such as GPGPUs that sit outside the host to snoop host caches and stay cache coherent with the host system for efficient processing.

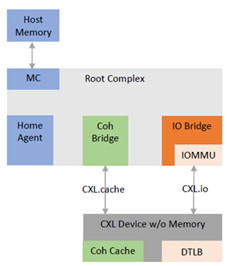

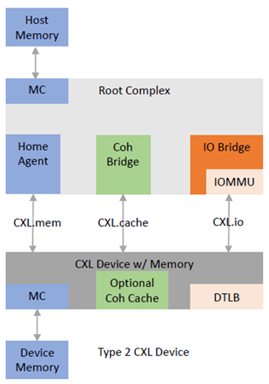

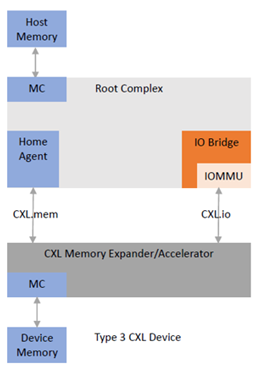

CXL defines three primary protocols:

CXL.io (functionally the same as PCIe; used for discovery and configuration)

CXL.mem (for memory access by load/store)

CXL.cache (to manage coherency and memory consistency)

The CXL.cache and CXL.mem protocols allow low-latency access to devices connected on a CXL link. CXL defines the following device types according to the protocols they implement. Note that CXL.io is required in all device types, as it is used for configuring the device.

Type 1 Device

A device that implements a coherent cache but has no memory of its own in the host's memory map. E.g., a final-level cache implementation outside the CPU bus.

Type 2 Device

A device that has both a cache and memory. Its memory is in the memory map of the host. E.g., GPGPU accelerators.

Type 3 Device

A device such as a memory expander, which can hold a volatile DDR memory pool. This pool can be shared among multiple hosts in the system, which lets a host expand its memory as the workload requires.
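The three device types differ in which protocols they run alongside the mandatory CXL.io: Type 1 adds CXL.cache, Type 2 adds both CXL.cache and CXL.mem, and Type 3 adds CXL.mem. A minimal sketch of that mapping follows; `CxlDeviceType` and `classify` are hypothetical names used only for illustration and are not part of any CXL software stack.

```python
from enum import Enum


class CxlDeviceType(Enum):
    TYPE1 = 1  # cache only
    TYPE2 = 2  # cache and memory
    TYPE3 = 3  # memory only


# Protocol set implemented by each device type (CXL.io is always present).
_PROTOCOLS_TO_TYPE = {
    frozenset({"CXL.io", "CXL.cache"}): CxlDeviceType.TYPE1,
    frozenset({"CXL.io", "CXL.cache", "CXL.mem"}): CxlDeviceType.TYPE2,
    frozenset({"CXL.io", "CXL.mem"}): CxlDeviceType.TYPE3,
}


def classify(protocols):
    """Return the device type for a collection of protocol names."""
    protos = frozenset(protocols)
    if "CXL.io" not in protos:
        raise ValueError("CXL.io is required for every device type")
    if protos not in _PROTOCOLS_TO_TYPE:
        raise ValueError(f"no device type uses exactly {sorted(protos)}")
    return _PROTOCOLS_TO_TYPE[protos]
```

For example, `classify(["CXL.io", "CXL.mem"])` yields `CxlDeviceType.TYPE3`, which matches the memory expander case.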

From a software point of view, CXL is built on top of PCIe, so it has its own set of configuration registers and memory-mapped registers. The CXL specification has gone through several revisions, the most notable being CXL 2.0 and CXL 3.0.

CXL configuration space is identified by DVSEC structures carrying the vendor ID 0x1E98. The enumeration of a CXL device follows the same sequence as a PCIe device. However, the way packets are exchanged differs: CXL is flit based, and supports 68-byte and 256-byte flits. Flits are fixed-size packets, which helps keep latency on the interface low.
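To make the identification step concrete, here is a rough sketch of how software might look for CXL DVSEC structures in a device's 4 KiB configuration space. It assumes the standard PCIe extended capability layout (list starting at offset 0x100, DVSEC capability ID 0x0023, vendor ID in the low 16 bits of the first DVSEC header, DVSEC ID in the low 16 bits of the second). The function name `find_cxl_dvsecs` and the synthetic config space built by `make_demo_config_space` are made up for this example; a real driver would read config space from hardware.

```python
import struct

PCIE_EXT_CAP_START = 0x100    # extended capability list begins here
EXT_CAP_ID_DVSEC = 0x0023     # Designated Vendor-Specific Extended Capability
CXL_DVSEC_VENDOR_ID = 0x1E98  # vendor ID used by CXL DVSEC structures


def find_cxl_dvsecs(cfg):
    """Return [(offset, dvsec_id), ...] for each CXL DVSEC in a config space image."""
    found = []
    visited = set()  # guards against a malformed, looping capability list
    offset = PCIE_EXT_CAP_START
    while (offset >= PCIE_EXT_CAP_START and offset + 4 <= len(cfg)
           and offset not in visited):
        visited.add(offset)
        (header,) = struct.unpack_from("<I", cfg, offset)
        if header in (0x00000000, 0xFFFFFFFF):
            break  # empty list or no device response
        cap_id = header & 0xFFFF
        if cap_id == EXT_CAP_ID_DVSEC and offset + 12 <= len(cfg):
            hdr1, hdr2 = struct.unpack_from("<II", cfg, offset + 4)
            if hdr1 & 0xFFFF == CXL_DVSEC_VENDOR_ID:
                found.append((offset, hdr2 & 0xFFFF))
        offset = (header >> 20) & 0xFFC  # next capability pointer, bits [31:20]
    return found


def make_demo_config_space():
    """Build a synthetic config space: CXL DVSEC, an unrelated capability, CXL DVSEC."""
    cfg = bytearray(4096)
    struct.pack_into("<III", cfg, 0x100,
                     (0x140 << 20) | (1 << 16) | EXT_CAP_ID_DVSEC,
                     (0x3C << 20) | (1 << 16) | CXL_DVSEC_VENDOR_ID,
                     0x0000)
    struct.pack_into("<I", cfg, 0x140, (0x180 << 20) | (1 << 16) | 0x0001)
    struct.pack_into("<III", cfg, 0x180,
                     (1 << 16) | EXT_CAP_ID_DVSEC,
                     (0x24 << 20) | CXL_DVSEC_VENDOR_ID,
                     0x0008)
    return bytes(cfg)
```

Calling `find_cxl_dvsecs(make_demo_config_space())` returns the two DVSEC entries at offsets 0x100 and 0x180 and skips the unrelated capability in between.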

The memory-mapped registers are accessed through BARs, and are located via the Register Locator DVSEC during enumeration. These registers, also called component registers, define the capabilities of the CXL device related to the snoop filter, RAS, link, security, etc. The HDM (Host-managed Device Memory) decoder capability defines the host system address range of the memory pool for a Type 3 device. It is programmed by the host during enumeration according to system requirements.
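The role of the HDM decoder can be pictured as a simple range check: the host programs a base and size, and any host physical address inside that window is routed to the device. The sketch below models only that idea. The class and field names are hypothetical, and real HDM decoders also handle details such as interleaving across devices, which are left out here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class HdmDecoder:
    base: int  # host physical address where the decoded range starts
    size: int  # length of the range in bytes

    def translate(self, hpa: int) -> Optional[int]:
        """Return the offset into device memory, or None if hpa is outside the range."""
        if self.base <= hpa < self.base + self.size:
            return hpa - self.base
        return None


# Example values are arbitrary: a 1 GiB window placed at 64 GiB.
decoder = HdmDecoder(base=0x10_0000_0000, size=0x4000_0000)
```

With this decoder, `decoder.translate(0x10_0000_1000)` gives `0x1000`, while an address just below the base or at the end of the window gives `None`.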

The CXL PHY is of the Flex Bus type and can support both PCIe and CXL transactions. The CXL LTSSM has the same states as PCIe, with modified TS1/TS2 ordered sets exchanged to set the link to run CXL or PCIe. The link layer defined by CXL differs from PCIe in that it is flit based and has an element called the ARB/MUX, which arbitrates between CXL.io and CXL.cache/mem to send flits to the PHY interface.
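As an illustration of the arbitration idea only, the sketch below interleaves flits from a CXL.io queue and a CXL.cache/mem queue onto one output stream. The plain round-robin policy is an assumption made for this example; the text above does not state which policy the ARB/MUX uses, and `arb_mux` is a hypothetical function name.

```python
from collections import deque


def arb_mux(io_flits, cachemem_flits):
    """Interleave two flit queues round-robin; return [(source, flit), ...]."""
    queues = [
        ("CXL.io", deque(io_flits)),
        ("CXL.cache/mem", deque(cachemem_flits)),
    ]
    order = []
    turn = 0
    while any(q for _, q in queues):
        name, q = queues[turn]
        if q:  # an empty queue simply gives up its turn
            order.append((name, q.popleft()))
        turn = (turn + 1) % len(queues)
    return order
```

When one queue runs dry, the other keeps the link busy, so no slot is wasted waiting on an idle protocol.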

After identifying the CXL links in the system, the host follows the LTSSM transitions to establish the links. The CXL driver then runs through enumeration to detect CXL devices, their topology and their capabilities, just as has been done for PCIe devices for many years.

The CXL specification has many features architected to support system scalability within the latency limitations. I will just list a few of them without explanation, as the purpose here is to introduce CXL as briefly as possible.

A Few CXL Features

- Data rate of 64 GT/s

- Memory Pooling with Multi Logical Devices

- Direct Memory Access for peer-to-peer traffic

- Global Persistent Flush

- Port Based Routing (Multi Level Switching)

- Trusted Execution Environment

The detailed CXL specification can be found at https://computeexpresslink.org/.

CXL is a step forward in solving hardware scalability issues in the datacenter, and it comes with its own set of challenges that the engineering community is working through to get CXL running in the field.